TEAMCLOUD

GPU-NOW AS A SERVICE

TeamCloud GPU-Now as a Service for AI in Malaysia

Accelerate your AI projects with TeamCloud’s GPU-Now, an on-demand and cost-effective GPU as a Service (GPUaaS), specially tailored for small to mid-sized AI models. Enjoy access to high-performance GPUs without breaking the bank.

Overcoming AI Project Challenges

Sustaining AI projects can be a challenge, especially when returns on investment take 2-5 years to materialize. Early investments in costly GPU hardware can drain resources—particularly during the trial-and-error phase when selecting the right GPU model. That’s where TeamCloud GPU-Now steps in.

Key Features of TeamCloud GPU-Now:

- GPU as a Service (GPUaaS): Powered by OpenStack technology, GPU-Now offers on-demand access to high-performance GPUs like RTX 3090, RTX 4090, RTX 6000 Ada, and H200 NVL, without upfront costs or complex setups.

- Tailored for AI Development: Designed specifically for AI developers, researchers, and enterprises working with small-to-mid-sized AI models.

- Flexible Payment Options: Choose between pay-per-use and subscription models for efficient scaling of your AI projects.

- Fully Managed & Hosted in Malaysia: TeamCloud GPU-Now ensures data sovereignty compliance while making AI development more cost-efficient and scalable.

- Seamless AI Training and Deployment: Efficiently train, fine-tune, and deploy your AI models with minimal hassle.

With TeamCloud GPU-Now, you can access cutting-edge GPUs tailored for your needs, scale your projects easily, and ensure long-term AI success—all without the financial burden of owning expensive hardware.

The Challenges of AI: High Costs, Complex Infrastructure, and Data Risks

High Cost of GPUs

The high cost of GPUs presents a significant barrier for businesses and researchers looking to train complex models, run deep learning algorithms, perform natural language processing (NLP), or conduct scientific simulations. The expensive upfront investment required for high-performance GPUs can strain resources, especially during the trial-and-error phase of AI development, making it difficult to scale AI projects effectively and affordably.

Infrastructure Design and Maintenance

AI/ML workloads demand specialized networking, storage, and compute resources, which can be complex to configure and maintain for optimal performance. Ensuring a reliable infrastructure for AI projects is crucial, as hardware failures and GPU degradation can lead to disruptions, causing delays and affecting the overall efficiency of AI model training and deployment. Proper infrastructure design and regular maintenance are key to preventing these issues.

Data Sovereignty and Compliance

For industries with stringent data regulations, running AI/ML projects across borders can be difficult due to concerns over data sovereignty and compliance. TeamCloud GPU-Now addresses these challenges by ensuring that your data remains securely stored within Malaysia, in full compliance with local laws. This eliminates risks associated with cross-border data transfers, providing a secure environment for your AI projects.

Protecting Your AI Data—Hourly, Daily, and Weekly

When developing AI models or processing real-time data, the last thing you should worry about is data loss. With TeamCloud GPU-Now, you can focus on your projects with peace of mind, knowing your data is safely protected through automated tiered backups.

Key Features of TeamCloud’s Automated Backups:

- Hourly Backups: Retained for the last 15 hours, ensuring up-to-date protection.

- Daily Backups: Retained for the last 7 days, offering quick recovery for recent changes.

- Weekly Backups: Retained for the last 4 weeks, providing long-term protection and easy rollback.

Whether you’re recovering from system failures or safeguarding your evolving AI models, TeamCloud ensures your data is securely protected at every stage.
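Once every tier has filled up, the three retention windows add up to 15 + 7 + 4 = 26 restore points. The sketch below illustrates this under stated assumptions: each tier is modeled as an independent backup job, daily copies are taken at 00:00 and weekly copies at 00:00 on Mondays. These schedule times and the `simulate` helper are hypothetical, not TeamCloud's documented scheduler.

```python
from collections import deque
from datetime import datetime, timedelta

# Retention limits from the list above: copies kept per tier.
RETENTION = {"hourly": 15, "daily": 7, "weekly": 4}


def simulate(hours, start=datetime(2024, 1, 1)):
    """Run a hypothetical tiered backup schedule for `hours` hours.

    Assumptions (not TeamCloud's documented scheduler): every tier is an
    independent job, daily backups run at 00:00, weekly backups run at
    00:00 on Mondays. A deque with maxlen drops the oldest copy
    automatically once a tier is full.
    """
    tiers = {name: deque(maxlen=limit) for name, limit in RETENTION.items()}
    for h in range(hours):
        now = start + timedelta(hours=h)
        tiers["hourly"].append(now)
        if now.hour == 0:
            tiers["daily"].append(now)
            if now.weekday() == 0:  # Monday
                tiers["weekly"].append(now)
    return tiers


if __name__ == "__main__":
    tiers = simulate(hours=5 * 7 * 24)  # five weeks, so every tier has rotated
    for name, copies in tiers.items():
        print(f"{name:>6}: {len(copies):2d} copies, oldest {copies[0]:%Y-%m-%d %H:%M}")
    print("total restore points:", sum(len(c) for c in tiers.values()))
```

With these assumptions the total settles at 26, and the oldest weekly copy reaches back about four weeks.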

Benefits of TeamCloud GPU-Now

Cost-Effective GPUaaS

TeamCloud GPU-Now offers affordable, high-performance GPUs with flexible pay-per-use or subscription models, ensuring sustainable costs and predictable pricing. This makes it the ideal solution for long-term AI projects, where managing expenses is crucial while accessing the power of top-tier GPUs.

Scalable On-Demand

With TeamCloud GPU-Now, you can easily scale GPU resources up or down to match the evolving needs of your AI projects. Whether you’re working on small-to-mid-sized AI models or tackling complex deep learning tasks, our flexible scaling ensures that you optimize GPU usage and maximize your ROI.

Peace of Mind

With TeamCloud, you can focus on your core business while we handle your infrastructure. Enjoy 24/7 support and hosting in a secure, high-availability Tier III data center, keeping your GPU instances and services online and protected.

Business Continuity

With 99.9% uptime guaranteed in our Service Level Agreement (SLA), TeamCloud GPU-Now delivers reliable service and consistent performance for your AI projects. We back this up with data redundancy and automated backups, minimizing disruptions and safeguarding against data loss.
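As a rough guide to what a 99.9% uptime figure allows, the downtime budget is (1 - 0.999) multiplied by the length of the measurement period. The sketch below assumes a 30-day month and a 365-day year; how the SLA actually defines its measurement window and any maintenance exclusions is not stated here, so treat the numbers as illustrative.

```python
def downtime_budget_minutes(uptime_pct, period_hours):
    """Maximum downtime, in minutes, permitted by an uptime percentage over a period."""
    allowed_fraction = 1 - uptime_pct / 100
    return allowed_fraction * period_hours * 60


if __name__ == "__main__":
    month = downtime_budget_minutes(99.9, 30 * 24)   # assumes a 30-day month
    year = downtime_budget_minutes(99.9, 365 * 24)
    print(f"per 30-day month: {month:.1f} min")       # about 43.2 minutes
    print(f"per year: {year / 60:.2f} h")             # about 8.76 hours
```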

Accelerate AI Deployment

Access mid-to-high-end GPUs, including the NVIDIA H200 NVL, to significantly speed up AI training, fine-tuning, and real-time inference. With TeamCloud GPU-Now, reduce iteration time and accelerate the deployment of your AI models, ensuring faster results and improved performance.

Optimized for AI Workloads

TeamCloud GPU-Now strikes the perfect balance between performance, cost, and scalability for small-to-mid-sized AI projects. Whether you’re working with LLMs, generative AI, or scientific simulations, our solution provides the ideal environment for efficient AI model training and deployment.

Fully Managed AI Infrastructure

With TeamCloud GPU-Now, you can focus entirely on your AI projects while we handle all aspects of infrastructure management. From hardware failures and repairs to regular maintenance, we ensure your AI infrastructure runs smoothly and efficiently—giving you peace of mind.

Safe and Secure Domestic Cloud

TeamCloud GPU-Now keeps your data in Malaysia, complying with Malaysia’s data sovereignty laws and global standards such as ISO/IEC 27017 and PCI DSS. Enjoy top-tier security and data protection for your AI projects while meeting both local and international regulations.

Key Features of TeamCloud GPU-Now

01

26+ backup copies

Data Protection & Backup

TeamCloud GPU-Now ensures your data is always protected with automatic backups every hour, day, and week. In the event of cyberattacks or disasters, quick recovery is guaranteed to maintain uninterrupted AI development and deployment.

02

Dedicated NVIDIA GPUs

Get access to dedicated, high-performance NVIDIA GPUs, including RTX 3090, RTX 4090, and H200 NVL. These non-shared GPUs accelerate AI/ML training, fine-tuning, and real-time inference, efficiently handling complex workloads and deep learning tasks.

03

Data Redundancy & Availability

TeamCloud GPU-Now provides high availability and data security with automatic redundancy across multiple data centers, minimizing downtime and safeguarding your AI workloads from unexpected disruptions.

04

Optimized for AI/ML & HPC Workloads

Designed for demanding AI/ML and high-performance computing (HPC) tasks, TeamCloud GPU-Now ensures high-speed training, precise inference, and reliable performance for complex models and custom applications.

05

Seamless Model Fine-Tuning

Easily fine-tune your pre-trained models on TeamCloud GPU-Now, enhancing accuracy and performance for your AI applications in a secure and reliable local cloud environment.

06

24/7 Support Assistance

Benefit from 24/7 support from our experienced engineers, ensuring your GPU instances run smoothly with quick issue resolution to maintain optimal performance for your AI projects.

Standard Features

- Platform Ease of Use

- Infrastructure and Performance

- Security and Protection

- Instance Management

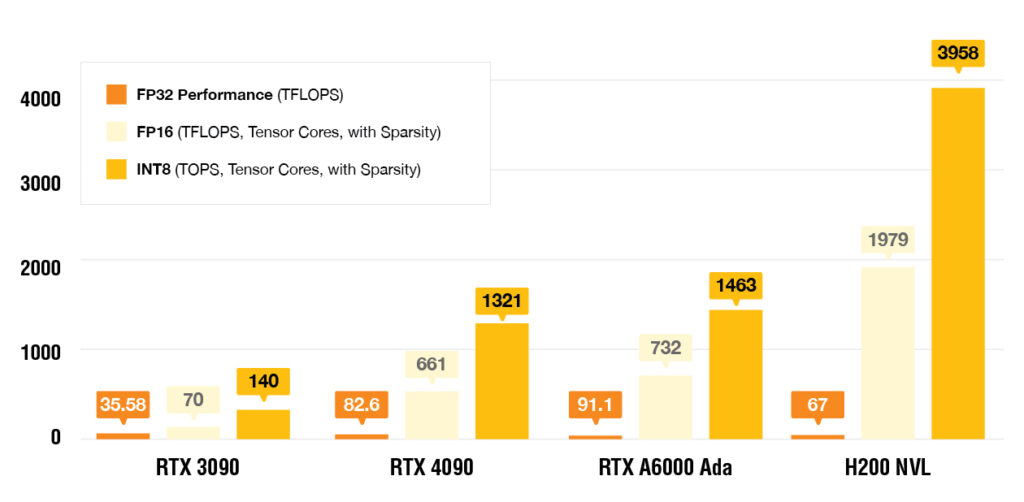

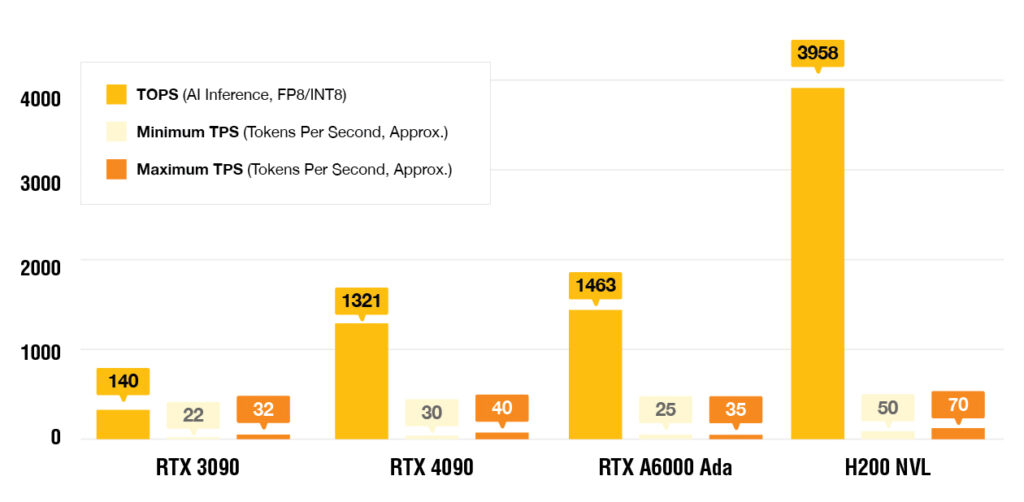

GPU Benchmarks

[Charts: GPU Compute Performance; AI Processing Power, TOPS vs TPS]

Available TeamCloud GPU-Now Options

We offer a range of NVIDIA GPU options to cater to your specific AI/ML needs:

The NVIDIA GeForce RTX 3090, powered by NVIDIA’s Ampere architecture, is a consumer GPU featuring 24GB of GDDR6X VRAM and 328 Tensor Cores. It offers solid performance for AI/ML workloads, content creation, and gaming, making it an excellent option for enthusiasts and solo developers tackling moderately demanding AI tasks.

✅ Training: Delivers solid speed improvements, around 5–15% faster than its predecessor, with 3rd-gen Tensor Cores providing basic support for deep learning.

✅ Fine-Tuning: Provides moderate performance gains, with improvements depending on the model size and task complexity.

✅ Inference: Handles real-time inference for small to medium AI tasks, though the 24GB VRAM may limit its capacity for larger projects.

The NVIDIA GeForce RTX 4090, built on NVIDIA’s Ada Lovelace architecture, is a high-end consumer GPU featuring 24GB of GDDR6X VRAM and 512 Tensor Cores. It significantly boosts compute performance for AI/ML workloads, gaming, and content creation, making it an ideal choice for users requiring both power and efficiency.

✅ Training: Up to 50–70% faster than its predecessor, with 4th-gen Tensor Cores optimizing deep learning tasks and accelerating model training.

✅ Fine-Tuning: Provides substantial performance gains, with improvements depending on model complexity and the specific needs of the task.

✅ Inference: Handles real-time inference across a broad range of AI applications, aided by high memory bandwidth. However, the 24GB VRAM may limit capacity for very large models.


The NVIDIA RTX 6000 Ada, a professional-grade GPU from NVIDIA’s Ada Lovelace lineup, features 48GB of GDDR6 VRAM and exceptional compute power. It’s designed for demanding tasks like AI, complex simulations, and 3D rendering, offering enhanced precision and capacity for advanced AI/ML users.

✅ Training: Up to 2x faster than its predecessor, with 4th-gen Tensor Cores driving robust deep learning performance and accelerated model training.

✅ Fine-Tuning: Delivers noticeable performance gains, depending on model size and task complexity, making it ideal for large-scale AI models.

✅ Inference: Excels in real-time inference for advanced AI applications, with extra VRAM supporting larger projects.

*Note: The NVIDIA RTX 6000 Ada is available only on Bare Metal GPU instances for top-tier performance.

The NVIDIA H200 NVL, based on NVIDIA’s Hopper architecture, is a datacenter GPU featuring an impressive 141GB of HBM3e VRAM and 528 Tensor Cores. Designed for next-generation AI/ML, high-performance computing (HPC), and enterprise workloads, it provides exceptional computational power and energy efficiency, with enhanced memory capacity and bandwidth.
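To compare the VRAM figures above against a model you plan to run, a common rule of thumb multiplies the parameter count by the bytes needed per parameter: about 2 bytes for 16-bit inference, about 0.5 bytes for 4-bit quantized inference, and about 16 bytes for full fine-tuning with Adam in mixed precision (fp16 weights and gradients, fp32 master weights, two fp32 optimizer moments). The flat 20% `overhead` for KV cache, activations and framework buffers is a hypothetical allowance; real usage depends on batch size, context length and software stack, so treat this as a first-pass sizing sketch.

```python
# VRAM per GPU, as listed in the descriptions above.
GPU_VRAM_GB = {"RTX 3090": 24, "RTX 4090": 24, "RTX 6000 Ada": 48, "H200 NVL": 141}

# Approximate bytes needed per model parameter (rules of thumb, not measurements).
BYTES_PER_PARAM = {
    "inference_fp16": 2.0,       # 16-bit weights only
    "inference_int4": 0.5,       # 4-bit quantized weights
    "full_finetune_adam": 16.0,  # fp16 weights + grads, fp32 master weights + 2 Adam moments
}


def estimate_vram_gb(params_billion, mode, overhead=0.2):
    """Estimate VRAM in decimal GB (1e9 params x bytes / 1e9 bytes per GB).

    `overhead` is a hypothetical flat allowance for KV cache, activations
    and framework buffers.
    """
    return params_billion * BYTES_PER_PARAM[mode] * (1 + overhead)


def fits_on(params_billion, mode, gpu, overhead=0.2):
    """True if the estimate fits in a single GPU of the given model."""
    return estimate_vram_gb(params_billion, mode, overhead) <= GPU_VRAM_GB[gpu]


if __name__ == "__main__":
    for size in (7, 13, 70):
        for mode in BYTES_PER_PARAM:
            gpus = [g for g in GPU_VRAM_GB if fits_on(size, mode, g)]
            need = estimate_vram_gb(size, mode)
            print(f"{size}B {mode:<18} ~{need:6.1f} GB -> {', '.join(gpus) or 'multi-GPU'}")
```

For example, a 7B model at 16-bit precision (about 17 GB by this estimate) fits on a single 24GB card, while a 13B model at the same precision needs the 48GB or 141GB class.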

TeamCloud GPU-Now Plans and Pricing

Explore TeamCloud GPU-Now plans tailored for your AI needs—accelerate machine learning, deep learning, and data processing with powerful NVIDIA GPUs like the RTX 3090, RTX 4090, and H200 NVL. Enjoy transparent pricing with no setup fees, no hidden charges, and most importantly, dedicated GPU cards (not shared) for maximum performance.

GPU Model: NVIDIA GeForce RTX 3090

| GPU Count | GPU Memory | CPU | Processor | RAM | Bandwidth | Price/Hour | Price/Month |
|---|---|---|---|---|---|---|---|
| 1 GPU | 1 x 24 GB | 8 core | AMD EPYC™ 9124 | 120 GB | 1Gbps | RM1.96 | RM1,435.02 |
| 2 GPU | 2 x 24 GB | 16 core | AMD EPYC™ 9124 | 240 GB | 1Gbps | RM3.92 | RM2,870.05 |
| 4 GPU | 4 x 24 GB | 32 core | AMD EPYC™ 9124 | 480 GB | 1Gbps | RM7.84 | RM5,740.10 |

GPU Model: NVIDIA GeForce RTX 4090

| GPU Count | GPU Memory | CPU | Processor | RAM | Bandwidth | Price/Hour | Price/Month |
|---|---|---|---|---|---|---|---|
| 1 GPU | 1 x 24 GB | 8 core | AMD EPYC™ 9124 | 120 GB | 1Gbps | RM2.64 | RM1,934.98 |
| 1 GPU | 1 x 48 GB | 8 core | AMD EPYC™ 9124 | 120 GB | 1Gbps | RM3.60 | RM2,635.50 |
| 2 GPU | 2 x 24 GB | 16 core | AMD EPYC™ 9124 | 240 GB | 1Gbps | RM5.29 | RM3,869.96 |
| 2 GPU | 2 x 48 GB | 16 core | AMD EPYC™ 9124 | 240 GB | 1Gbps | RM7.20 | RM5,271.01 |
| 4 GPU | 4 x 24 GB | 32 core | AMD EPYC™ 9124 | 480 GB | 1Gbps | RM10.57 | RM7,739.92 |
| 4 GPU | 4 x 48 GB | 32 core | AMD EPYC™ 9124 | 480 GB | 1Gbps | RM14.40 | RM10,542.02 |

GPU Model: NVIDIA H200 NVL Tensor Core

| GPU Count | GPU Memory | CPU | Processor | RAM | Bandwidth | Price/Hour | Price/Month |
|---|---|---|---|---|---|---|---|
| 1 GPU | 1 x 141 GB | 32 core | AMD EPYC™ 9354P | 240 GB | 1Gbps | RM19.09 | RM13,972.33 |
| 2 GPU | 2 x 141 GB | 64 core | AMD EPYC™ 9354P | 480 GB | 1Gbps | RM38.18 | RM27,944.67 |
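To choose between pay-per-use and a monthly subscription, it helps to know how many hours of use make the two options cost the same: monthly price divided by hourly price. The sketch below uses only the list prices from the tables above and does not model any other subscription terms (commitments, discounts or bundled extras), which are not described here.

```python
# (price per hour, price per month) in RM, copied from the plan tables above.
PLANS = {
    "RTX 3090 x1": (1.96, 1435.02),
    "RTX 4090 x1 (24 GB)": (2.64, 1934.98),
    "H200 NVL x2": (38.18, 27944.67),
}


def pay_per_use_cost(price_per_hour, hours):
    """Cost of running an instance for `hours` hours at the hourly rate."""
    return price_per_hour * hours


def break_even_hours(price_per_hour, price_per_month):
    """Hours of usage per month at which both billing options cost the same."""
    return price_per_month / price_per_hour


def cheaper_option(price_per_hour, price_per_month, hours):
    """Return 'monthly' or 'hourly', whichever is cheaper for `hours` of use."""
    return "monthly" if price_per_month < pay_per_use_cost(price_per_hour, hours) else "hourly"


if __name__ == "__main__":
    for name, (hourly, monthly) in PLANS.items():
        print(f"{name:<20} break-even ~{break_even_hours(hourly, monthly):.0f} h/month; "
              f"200 h -> {cheaper_option(hourly, monthly, 200)}")
```

For each of these plans the break-even point works out to roughly 730 hours, about a full month of continuous running. Based on list prices alone, pay-per-use suits part-time workloads, while the monthly plan gives a fixed, predictable bill for instances that run around the clock.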

Upgrade Option

| IP Address | Price / Hour |
|---|---|
| One floating IP address associated with a running instance | Free |
| Additional floating IP address associated with a running instance | RM0.043 |
| One floating IP address not associated with a running instance | RM0.043 |
| One floating IP address remap | Unmetered |

| Data Transfer | Price / Hour / GiB |
|---|---|
| First 1 TiB (*Not applicable to China Premium Route) | Free |
| Up to 10 TiB | RM0.44 |
| Next 40 TiB | RM0.31 |
| 50 TiB onward | RM0.30 |
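The data transfer tiers can be read as graduated pricing, where each GiB is billed at the rate of the band it falls into. The sketch below rests on three assumptions: the rates apply per GiB transferred in a billing period (the table header lists Price / Hour / GiB), "Up to 10 TiB" covers usage from 1 TiB to 10 TiB, and "Next 40 TiB" covers 10 TiB to 50 TiB. The China Premium Route exception is not modeled.

```python
GIB_PER_TIB = 1024

# (upper bound of tier in GiB, RM per GiB) from the Data Transfer table;
# None marks the open-ended top tier.
TIERS = [
    (1 * GIB_PER_TIB, 0.00),
    (10 * GIB_PER_TIB, 0.44),
    (50 * GIB_PER_TIB, 0.31),
    (None, 0.30),
]


def transfer_cost_rm(gib):
    """Graduated pricing: each GiB is billed at the rate of the tier it falls in."""
    cost = 0.0
    lower = 0
    for upper, rate in TIERS:
        if gib <= lower:
            break
        top = gib if upper is None else min(gib, upper)
        cost += (top - lower) * rate
        if upper is None:
            break
        lower = upper
    return round(cost, 2)


if __name__ == "__main__":
    for usage in (500, 2 * GIB_PER_TIB, 20 * GIB_PER_TIB, 60 * GIB_PER_TIB):
        print(f"{usage:>6} GiB -> RM{transfer_cost_rm(usage):,.2f}")
```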

| Storage | Price / GiB SSD |
|---|---|
| Provision of storage (inclusive of IOPS) | RM0.60 |

| Licensing | Price / Hour |
|---|---|
| Windows License | RM0.175 |

Use Cases for TeamCloud GPU-Now Servers

Common deployment scenarios for TeamCloud GPU-Now.

Industry: Cybersecurity

Challenge: Security engineers are overwhelmed by vast volumes of logs and alerts, struggling with false positives and slow threat detection due to data overload.

Solution: With TeamCloud GPU-Now, AI engineers can fine-tune AI models for anomaly detection, event classification, and real-time threat detection. GPU-accelerated processing helps security teams quickly filter out false positives, prioritize threats, and automate risk assessments, leading to faster and more efficient incident response.
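Before fine-tuning a model, teams often start from a simple statistical baseline to see which time windows stand out. The sketch below is such a baseline, not the GPU-trained anomaly detection model described above: it flags intervals whose alert count sits far from the median, using the modified z-score (median and median absolute deviation) with the commonly used 3.5 cutoff. The alert counts are made-up sample data.

```python
from statistics import median


def flag_anomalies(counts, threshold=3.5):
    """Return indices of counts whose modified z-score exceeds `threshold`.

    Modified z-score = 0.6745 * (x - median) / MAD, where MAD is the median
    absolute deviation; unlike mean/stdev it is not dragged upward by the
    outliers it is trying to detect.
    """
    med = median(counts)
    mad = median(abs(c - med) for c in counts)
    if mad == 0:
        # At least half the values equal the median, so any deviation stands out.
        return [i for i, c in enumerate(counts) if c != med]
    return [i for i, c in enumerate(counts) if 0.6745 * abs(c - med) / mad > threshold]


if __name__ == "__main__":
    alerts_per_5_min = [120, 118, 125, 122, 119, 121, 980, 117, 123, 120]  # sample data
    print(flag_anomalies(alerts_per_5_min))  # [6]
```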

Industry: Finance & Accounting

Challenge: Accountants waste hours manually extracting data from PDF invoices, scanned documents, and emails, making the process slow and prone to errors.

Solution: AI developers can leverage TeamCloud GPU-Now to train AI-powered invoice processing models that automate text extraction, validation, and data entry. With GPU-accelerated Optical Character Recognition (OCR) and Natural Language Processing (NLP), businesses can eliminate manual work, minimize errors, and speed up financial workflows.

Industry: AI Chatbot Development

Challenge: Traditional chatbots rely on pre-scripted responses and often fail to retrieve real-time information, providing outdated or generic answers.

Solution: AI developers can utilize TeamCloud GPU-Now to build Retrieval-Augmented Generation (RAG)-enhanced chatbots that combine real-time data retrieval with generative AI. By leveraging GPU-accelerated processing, these chatbots can understand complex queries, fetch up-to-date information, and deliver contextually relevant responses at scale.
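The retrieval half of RAG can be sketched in a few lines: find the snippets most similar to the question, then place them in the prompt sent to the generative model. In this minimal sketch, plain word-count vectors and cosine similarity stand in for the neural embedding model and vector database a production chatbot would typically run on GPU, and the final LLM call is not shown. The knowledge-base snippets and helper names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lower-case word tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return up to k documents most similar to the query (score > 0 only)."""
    q = Counter(tokenize(query))
    scored = sorted(((cosine(q, Counter(tokenize(d))), d) for d in documents),
                    key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(query, documents, k=2):
    """Augment the user's question with retrieved context before generation."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [  # hypothetical knowledge-base snippets
        "Hourly backups are retained for the last 15 hours.",
        "Daily backups are retained for the last 7 days.",
        "The RTX 4090 has 24GB of GDDR6X VRAM.",
    ]
    print(build_prompt("How long are hourly backups retained?", docs, k=1))
```

Because retrieval happens at question time, updating the snippet list is enough to give the chatbot fresh information without retraining the model.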